You've gotta be doing something.

An earlier post mentioned that my "go-in" position is to assume that any successful company has to be doing something in many of the process areas; otherwise it wouldn't be successful in general, let alone in using CMMI to improve its processes.

It might help to explain that position a little, in a way that others may find illuminating.

CMMI is written in the language of process-improvement-speak. What I learned early in my career is that process-improvement-speak rarely speaks to decision-makers or others in positions of authority over "real" work. This seems to hold true for software developers as well, regardless of where they are in the corporate food chain.

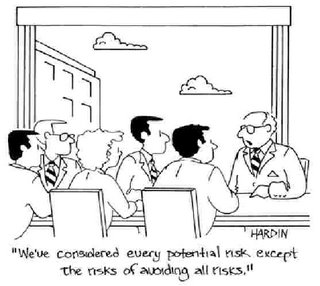

What I learned that *does* speak very loudly and clearly to those who hold sway over what gets attention -- as well as those who are doing the heavy lifting -- is the discussion of risk.

When a risk becomes an issue, it usually involves loss of resources, time, and money. It's the money piece that grabs the attention of most "gold owners" (aka "decision-makers"). Whether the loss is time or resources, it usually translates into money at the top anyway. At the "bottom", time and resources are the focus. Regardless, if you really want to grab attention, talk about your project failing.

In fact, every goal and practice in the CMMI avoids some risk. Not every risk CMMI avoids will lead to imminent danger or failure, but left alone, many risks avoided by CMMI could eventually lead to failure or loss. From another perspective, many CMMI practices are "early warning signs" of risk.

This is why I believe that most organizations are likely doing many of the things needed to implement CMMI practices. If they weren't avoiding the same risks CMMI is concerned about, their projects would fail more often, and they wouldn't be in business long enough to be thinking about CMMI.

Whether an organization is using agile or traditional methods, they are likely working against risk. With CMMI working to avoid the same risks as agile and traditional developers, it stands to reason that we ought to be able to find how those risks are avoided in each organization's view of reality. We can then see how/where CMMI practices can be incorporated to improve the performance of that risk-avoidance reality.

What I normally find is that the basic efforts to avoid the risk are in place. What's often missing are some of the up-front activities that cause these risk-avoiding behaviors to be applied consistently from project to project, and some of the follow-through activities that close the loop so the organization can actually improve them. Usually, once exposed to the few practices that can help them improve upon their already decent risk-avoidance efforts, organizations welcome the reasonable suggestions.

What's more often missing is the recognition that the previous successes experienced by the team (or organization) relied heavily on certain people, and on their experience and natural or learned know-how, to ensure risks are avoided. This is fine in CMMI -- if that's all that is expected. Accordingly, this is called "Capability Level 1". Most organizations, however, want more than just achievement of the Specific Goals through the performance of Specific Practices. They want to institutionalize that performance; meaning, they want it to be consciously managed and available to be distributed throughout their group, applied with the purpose of producing consistently positive outcomes, and improved via collective experience.

The first step in that journey is to manage the risk-avoidance-and-improvement practices. That's called "Capability Level 2". After that, we get into really taking a definitional and introspective approach towards institutionalization and improvement in "Capability Level 3".

Usually, once agile teams see that many practices are accomplished as a natural outcome of risk-avoidance, and that the practices not already inherent in what they're doing aren't unreasonable, staying agile while also improving their practices using CMMI is quite a non-event.

As projects get bigger and/or more complex, and as teams get bigger, the mechanisms to manage risk are necessarily more comprehensive. If you're a small, agile, yet disciplined organization, most risk-avoiding activities are natural. The question is whether they are scalable. If you're such a company, you might look at the additional activities as building out the infrastructure to be bigger. Not less agile.

Bottom line: translate CMMI practices into risk-avoidance and you will find the value in them as well as the means to accomplish them in a lean and agile way.

(Thanks to http://wilderdom.com/Risk.html for the great images!)

